An introduction to Semiconductors (Part 2)

In Part 1 of this series, readers were introduced to the history of the transistor and integrated circuit. Part 2 will cover the microprocessor, memory and Intel's co-founder Gordon Moore.

To briefly recap Part 1 of this series: the transistor was invented in the late 1940s and laid the foundation for advanced semiconductor technology. Later, in the late 1950s and early 1960s, the integrated circuit was invented, enabling more complex and reliable solutions.

Innovation within the semiconductor industry continued to pick up pace in the years that followed, laying the groundwork for the microprocessor and DRAM (Dynamic Random Access Memory). But before digging deeper into microprocessors and DRAM, let us return to Robert Noyce. In 1968, Robert Noyce and Gordon Moore (another prominent semiconductor icon who will be discussed later) both left Fairchild Semiconductor and founded what later became Intel (Integrated Electronics). The company was originally named NM Electronics, but it acquired the name Intel shortly afterwards and rebranded. Fun fact: the rights to the name were held by a hotel chain in the Midwest, from which Noyce and Moore bought them for USD 15,000.

The microprocessor

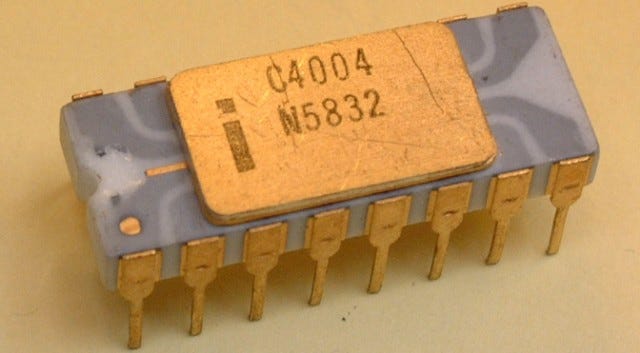

Throughout the 1970s, semiconductor technology continued to advance at a rapid pace with the development of microprocessors and other types of components. Briefly described, a microprocessor is a single integrated circuit that contains all the functions of a central processing unit (CPU). The Intel 4004, released in 1971, is considered the first commercially available microprocessor. The key engineers behind its development included Federico Faggin, Ted Hoff, and Stanley Mazor.

The Intel 4004 held approximately 2,300 transistors. For comparison, a modern AMD Epyc CPU holds about 40 billion.

Compare the Intel 4004 with the first integrated circuit, invented about 10 years earlier. The first IC revolutionized electronics by miniaturizing and simplifying circuits. The 4004 took this a step further by enabling complex computing processes on a single chip. Because it could be used in many different types of devices, it paved the way for personal computers and modern digital electronics.

In short, the move from the first IC to the Intel 4004 microprocessor was like going from a simple electronic trick to having a mini-computer on a chip, which ultimately unlocked endless possibilities for the continued development of semiconductors and computing.

(For future reference, microprocessors and CPUs are often categorized as “logic” chips, since they are used for logical operations such as computing.)

The DRAM

In the late 1960s, IBM’s Robert Dennard began exploring how computers could remember data more efficiently than with the “magnetic cores” that had served this purpose in electronic devices until then. At the time, demand was growing for more compact, cost-effective, high-performance, and scalable memory solutions. Robert Dennard’s innovation, the DRAM chip (dynamic random access memory), later replaced magnetic core memory as the standard for computer memory thanks to its higher density and lower cost.

However, since IBM tended to develop and use its technological innovations within its own product ecosystem, the commercialization and later widespread adoption of DRAM were mainly driven by companies like Intel.

In the early 1970s, Intel launched its first DRAM chip, the Intel 1103. The company had recognized a major opportunity in producing DRAM chips in high volume, since this type of chip (just like the microprocessor) worked in many different types of devices without having to be tailor-made for each one.

A DRAM chip can be described as a chip consisting of tiny buckets (capacitors) that can hold a tiny electrical charge. Each bucket can be filled with electricity to represent a "1" (like saying "Yes") or emptied to represent a "0" (like saying "No"). This way, by checking which buckets are full and which are empty, a computer can read the stored information (the series of 1s and 0s that make up digital data).

The "dynamic" part of “dynamic random access memory” describes the fact that these buckets have tiny leaks. The electrical charge within them slowly drains away, and unless they are “refilled” with new charge they start losing information. To prevent this, the computer regularly goes through each bucket and, through transistors, refills it to the original level if it was supposed to be full, ensuring that no data is lost. This process happens many times per second and is called “refreshing”.
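To make the bucket picture concrete, here is a minimal toy simulation of a single leaky cell. It is a sketch under made-up assumptions, not a model of real hardware: the leak rate, the read threshold, and the names `DramCell` and `bit_after` are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

FULL = 1.0        # charge of a freshly written "1" (arbitrary units, assumed)
THRESHOLD = 0.5   # above this the bucket reads as 1, otherwise 0 (assumed)
LEAK = 0.1        # fraction of charge lost per time step (made-up value)


@dataclass
class DramCell:
    """One 'bucket': a capacitor holding a small, slowly leaking charge."""
    charge: float = 0.0

    def write(self, bit: int) -> None:
        # Fill the bucket for a 1, empty it for a 0.
        self.charge = FULL if bit else 0.0

    def read(self) -> int:
        # A bucket that is more than half full counts as a 1.
        return 1 if self.charge > THRESHOLD else 0

    def leak(self) -> None:
        # One time step passes and part of the charge drains away.
        self.charge *= 1.0 - LEAK

    def refresh(self) -> None:
        # Read the current bit and write it back at full strength.
        self.write(self.read())


def bit_after(steps: int, refresh_every: Optional[int]) -> int:
    """Store a 1, let `steps` time steps pass, and return what is read back."""
    cell = DramCell()
    cell.write(1)
    for step in range(1, steps + 1):
        cell.leak()
        if refresh_every and step % refresh_every == 0:
            cell.refresh()
    return cell.read()


print(bit_after(20, None))  # never refreshed
print(bit_after(20, 5))     # refreshed every 5 steps
print(bit_after(20, 10))    # refreshed every 10 steps
```

With these toy numbers, the stored 1 survives only when the refresh comes often enough. If the bucket has already drained below the threshold by the time it is refreshed, the refresh writes back a 0 and the information is gone.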

(You may now wonder how the SSD in a powered-off computer, or a USB stick, can remember stored data without being frequently “refreshed”. That is because DRAM is not used for modern long-term data storage. Today, NAND flash memory is used for this purpose, but that is another topic.)

Intel, along with others, became a significant player in the DRAM market. However, by the mid-1980s, competitive pressure from Japanese manufacturers, who had gained a dominant position through advanced manufacturing techniques and aggressive pricing, made the DRAM business unprofitable for many market participants, including Intel. Consequently, Intel exited the DRAM market in 1985 to concentrate on its microprocessor business.

Gordon Moore and Moore’s Law

We already know that Gordon Moore was a co-founder of Intel. But Gordon Moore is also known for Moore’s Law, which was a prediction he first made in 1965.

His prediction was based on the observation that the number of transistors on a microchip doubles approximately every year (to learn what a transistor is, read Part 1 of our introduction to semiconductors). In 1975, he revised this to approximately every two years. This observation, or “law”, has since become a driving principle in the semiconductor industry, guiding expectations for technological progress. In essence, Moore's Law suggests that computing power and computing efficiency will increase exponentially over time, while the cost of computing will decrease.
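The doubling rule can be turned into a small calculation. The sketch below assumes a clean doubling every two years, starting from the Intel 4004's roughly 2,300 transistors in 1971. The function names and the target year are chosen purely for illustration, and real chips do not follow the curve this neatly.

```python
import math


def projected_transistors(start_count: float, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Transistor count implied by one doubling every `doubling_years` years."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


def years_to_reach(start_count: float, target_count: float,
                   doubling_years: float = 2.0) -> float:
    """Years of steady doubling needed to grow from start_count to target_count."""
    return doubling_years * math.log2(target_count / start_count)


# Start from the Intel 4004: about 2,300 transistors in 1971.
# 48 years later means 24 doublings.
print(f"{projected_transistors(2_300, 1971, 2019):,.0f}")

# How long would steady doubling take to reach 40 billion transistors?
print(f"{years_to_reach(2_300, 40e9):.1f} years")
```

Under these assumptions, 24 doublings turn 2,300 transistors into about 38.6 billion, the same order of magnitude as the roughly 40 billion transistors in a modern server CPU.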

So why does it matter to double the number of transistors every two years? In short, the more transistors that fit on a chip, the more computing capacity it has. Furthermore, the more transistors that fit on a chip of the same size, the more energy-efficient it becomes. Density increases because the transistors and the gaps between them shrink, and smaller transistors need less energy to produce the same number of 1s and 0s.

The continuous shrinking of semiconductor chips is one of the most significant achievements in human history. Without it, we would be nowhere near having pocket-sized smartphones, wireless earphones and so on.

A transistor can now be manufactured at a size of roughly 50 nanometers (nm), which is smaller than many viruses. For additional context, 1 nm is equal to 1 millimeter divided by 1,000,000 (one million).

Is Moore’s Law dead or not?

The question has been debated for many years. There are physical limits to how small transistors can be made. Today’s transistors are approaching the scale at which quantum effects (such as electron tunneling) begin to interfere with their operation, which is a fundamental barrier to the continuation of Moore's Law.

The cost of designing and manufacturing chips that keep pace with Moore's Law is also rising rapidly, which strengthens the incentive to find alternative solutions and materials.

In the short to medium term, however, Moore’s Law remains alive, although it depends heavily on the continued development of manufacturing technology such as ASML’s extreme ultraviolet (EUV) lithography machines.

But that is a topic for another article.

With that, Part 2 has come to an end. If you found it useful, smash the like button and share the text with friends and colleagues!